Working in the building automation field brings many unexpected situations that need custom solutions. Let’s examine some tools that are at everyone's disposal that some might not be aware of. For those of you who share a tech mindset, I hope this brings some new ideas your way, and for those of you who do not, I hope this offers you insight into the solutions that are available. Data is the most important resource in today's modern world. We often have to pull this data out of our system to process for analysis, third-party commissioning, energy audits, etc. I wanted to share a recent event and my solution to the problem in case others have similar situations in the future.

A problem arose where an engineer needed to evaluate trends on a control system, but the data exported from the building automation system (BAS) was simply too large to open in any modern spreadsheet program, such as Excel or Apple Numbers. This data export included millions of rows of data and exceeded the limitations of these programs. Most modern spreadsheet applications tend to stop working after about 1 million rows of data. I was informed that the BAS was trending these particular gas sensors at an interval of one minute for the last few years. By the time this data was given to me, it was one large file with four to five years of one-minute-interval data.

Whenever I encounter a problem that involves processing massive amounts of data, I tend to lean on Python. Python is a scripting language that allows us to use code to do just about anything you can think of, but the beauty of it is it can be simple to get into and as complicated as a person wants to take it. Most modern universities include courses in Python not just for computer engineering majors but also for scientists and engineers.

Python also has a very abundant and accessible online community that makes learning a bit easier than other languages. Processing data, visualization, and machine learning are its strong points.

To solve the problem this engineer had, we needed to import a large CSV file into Python and find a way to divide up our data into small chunks of time. There are many tools available to run Python, such as a Jupiter notebook, which allows you to build an interactive notebook of both code and comments. You can run each command line by line, making it easy to digest the information. We will be using a module in Python called Pandas, which allows us to manipulate data easier, and a built-in module called Datetime, which will allow us to format our time series data into a standard that can be more easily understand. We will also define our input file and our output file. After that, we will read our CSV into Python.

After we read our CSV into Python, we will set our index to the timestamp column of data so the computer can understand that we are manipulating data that is based on the order of time not just the number of rows.

Once this is done, we can simply tell the computer how we want to resample our data, and how we want to export it. We will tell the computer in the code below that we want 15-minute buckets of time, and when it groups those timestamps, we want to take the average during that time. We will also point it to the “value” column and use that data to calculate our average. Our CSV is very simple; it just includes timestamp and value columns.

That’s it. Problem solved!

This is a small, simple example, and not every task is quite this straightforward, but I wanted to share the tools available that can make your life easier. By reducing our timestamps to 15-minute buckets, we now have a CSV that has been reduced by more thasn 120 MB and under 1 million rows of data. This allows engineers to view data in a way they are more comfortable with, and it did not take us much time to accommodate this request.

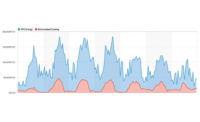

Requests like these will become more and more common as we keep evolving into a data-driven world. Knowing there are tools available like this can save us time in the future and solve unique problems in the workplace. Over the years, I have encouraged different coworkers to play around with Python, and, the majority of the time, they have been impressed with useful features. We have used Python for not only data formatting but also visualizing energy usage in buildings. I encourage everyone to expand their horizons if they find this interesting or useful and see where it can solve problems in your workplace.

James Regan has been working in the building automation industry since 2013. He started as a controls technician for Johnson Controls, working primarily in critical hospital environments. He moved on to an energy engineering role optimizing the automation systems of hospitals with a major focus on maintaining proper air quality settings as efficiently as possible. In 2018, he accepted a role as building systems analyst with Piedmont Service Group. In this role, he supported efforts to increase efficiency through identifying poor sequences, faulty field devices, or failing mechanical equipment. He currently is the analytics manager overseeing the building analytics platform where he supports Piedmont Service Group and CMS Controls with optimizing or continuous commissioning through analytics.