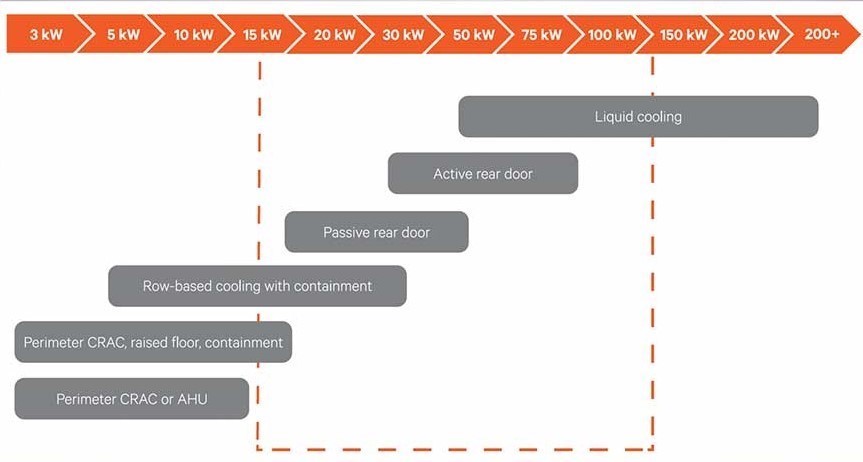

Liquid cooling is at a pivotal point in its evolution as it moves out of its niche in high-performance computing (HPC) and into a growing number of data centers. This trend is being driven by the high-density racks required to support artificial intelligence (AI) and other latency-sensitive and processing-intensive business applications. With equipment racks supporting these applications now routinely exceeding 30 kW, engineers charged with ensuring thermal management are facing the limits of air as a heat-transfer medium.

Air-cooling systems have adapted to rising rack densities through increased system efficiency, moving cooling closer to the source of heat, and employing containment. But these approaches deliver diminishing returns and suffer reduced efficiency as rack densities rise above 30 kW.

Liquid cooling offers an effective and efficient solution. Through its various configurations, liquid cooling improves the performance and reliability of high-density racks, often at a lower cost of ownership than air-cooled systems. However, introducing liquid cooling into an air-cooled facility presents a number of design challenges that must be navigated to ensure a successful deployment.

Liquid-Cooling Fluid Selection

There are three main types of liquid-cooling technology being used today: rear-door heat exchangers, which use an indirect approach, and direct-to-chip cold plates and immersion cooling, which are considered direct forms of liquid cooling. A variety of “liquids” are used in these systems depending on the technology being employed, whether a single- or two-phase process is being used, and the required heat capture capacity of the fluid. For a review of liquid-cooling technologies, see the Vertiv white paper, Understanding Data Center Liquid Cooling Options and Infrastructure Requirements.

Fluid selection is an important decision that should be made as early in the design process as possible. Different fluids have different costs, thermal capacities, and chemical compositions that must be considered when selecting materials and designing the distribution system. Service and maintenance requirements also vary based on the fluid selected, and these also need to be accounted for in the design.
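How a fluid's thermal capacity affects distribution design follows from the steady-state energy balance Q = ṁ · c_p · ΔT. For a given heat load and temperature rise, a fluid with lower specific heat needs proportionally more flow. The minimal Python sketch below assumes approximate properties for water near 25 °C and a hypothetical 30 kW rack with a 10 K supply-to-return rise. The `Fluid` class and `required_flow_lpm` function are illustrative names, not part of any vendor tool, and actual sizing should use the fluid supplier's data at operating temperature.

```python
"""Sketch: coolant flow needed to carry a given heat load.

Uses the steady-state energy balance Q = m_dot * cp * delta_T.
Fluid properties are assumed, approximate values for illustration only.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    cp_kj_per_kg_k: float     # specific heat capacity, kJ/(kg*K)
    density_kg_per_m3: float  # density at operating temperature


# Approximate properties of water near 25 C (assumed values).
WATER = Fluid("water", cp_kj_per_kg_k=4.18, density_kg_per_m3=997.0)


def required_flow_lpm(heat_kw: float, fluid: Fluid, delta_t_k: float) -> float:
    """Volumetric flow in litres per minute needed to absorb heat_kw
    with a supply-to-return temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # kW = kJ/s, so kJ/s / (kJ/(kg*K) * K) gives kg/s.
    mass_flow_kg_s = heat_kw / (fluid.cp_kj_per_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / fluid.density_kg_per_m3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    # Hypothetical 30 kW rack with a 10 K temperature rise.
    print(f"{required_flow_lpm(30.0, WATER, 10.0):.1f} L/min")
```

With these assumed values, water needs roughly 43 L/min. Halving the specific heat at the same density doubles the required flow, which is one reason fluid choice should be settled before the distribution system is designed.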

Nonconductive dielectric fluids can be used in direct-to-chip cooling and are required for immersion cooling. Because they do not conduct electricity, these fluids eliminate the potential for equipment damage from a fluid leak. However, they are expensive and may raise environmental, health, and safety concerns, so the same risk-mitigation strategies used with water or a water/glycol mixture should still be applied.

Plumbing System Design

Any material that is in contact with the fluids used must be confirmed for wetted material compatibility based not only on the specific chemical composition of the fluid but also on system temperatures and pressures. Fittings should get extra scrutiny in the material selection process, as poorly designed fittings can represent a weak spot in the fluid distribution system. Quick disconnect fittings are generally recommended to enable serviceability, and shutoff valves should be designed into the system to enable fitting disconnection and leak intervention.

Significant experience has been gained regarding material compatibility and fitting design through the many liquid-cooling deployments in HPC applications, some of which have now been in operation for more than 10 years. This experience can prove valuable when designing a liquid-cooling system today and is shared in a white paper published by the Open Compute Project. It’s also smart to seek out vendors and contractors with experience in liquid cooling beyond the technology used in the equipment rack.

For raised-floor data centers, poorly planned piping runs can obstruct airflow. Computational fluid dynamics (CFD) simulations should be used to route piping so that it has minimal impact on airflow through the floor. In slab data centers, piping is generally run overhead, above the aisles and supported from the ceiling structure, with drip pans under all fittings to minimize the potential impact of leaks.

Plumbing system installation also requires careful planning. Whether plumbing runs underfloor or overhead, installation has the potential to disrupt existing data center operations. A phased approach to deployment can minimize this disruption. In colocation facilities, for example, operators are adding plumbing to one or two suites to meet initial demand, with plans to expand to additional suites as demand increases. The same approach is being employed in enterprise data centers, where a corner section of the data center may be devoted to liquid-cooled racks with plans to expand in the future.

The Secondary Cooling Loop

When supporting liquid cooling, the best practice is to establish a secondary cooling loop in the facility that allows precise control of the liquid being distributed to the rack. The key component in this loop is the cooling distribution unit (CDU).

CDUs deliver fluid to liquid-cooling systems and remove heat from that fluid. By separating the liquid-cooling loop from the facility water system, the CDU provides more precise control of fluid volumes and pressure, minimizing the potential impact of any leaks that may occur. CDUs can also maintain the supply temperature above the data center dew point to prevent condensation, which can trigger false alarms in leak-detection systems and cause corrosion on uninsulated or poorly insulated plumbing.
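Keeping the supply temperature above the dew point can be expressed as a simple check. This minimal sketch assumes the Magnus approximation for dew point with the commonly used constants a = 17.62 and b = 243.12 °C, plus an assumed 2 K safety margin. The function names and the margin are hypothetical and are not taken from any CDU product.

```python
"""Sketch: verify a CDU supply temperature clears the room dew point.

Dew point uses the Magnus approximation; the 2 K margin is an assumed
value for illustration, not a vendor recommendation.
"""
import math

MAGNUS_A = 17.62
MAGNUS_B_C = 243.12  # degrees C


def dew_point_c(room_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point (C) from dry-bulb temperature and relative humidity."""
    if not 0 < rel_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + (
        MAGNUS_A * room_temp_c / (MAGNUS_B_C + room_temp_c)
    )
    return MAGNUS_B_C * gamma / (MAGNUS_A - gamma)


def supply_temp_is_safe(supply_c: float, room_temp_c: float,
                        rel_humidity_pct: float, margin_k: float = 2.0) -> bool:
    """True if the secondary-loop supply temperature exceeds the dew point by margin_k."""
    return supply_c >= dew_point_c(room_temp_c, rel_humidity_pct) + margin_k


if __name__ == "__main__":
    print(f"dew point: {dew_point_c(24.0, 50.0):.1f} C")
    print(supply_temp_is_safe(18.0, 24.0, 50.0))
    print(supply_temp_is_safe(13.0, 24.0, 50.0))
```

At 24 °C and 50% relative humidity, the approximation gives a dew point of about 12.9 °C, so a 13 °C supply fails the assumed margin while an 18 °C supply passes.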

For smaller projects, a CDU with a liquid-to-air heat exchanger can simplify deployment, assuming the air-cooling system has the capacity to handle the heat rejected from the CDU. In most cases, the CDU will use a liquid-to-liquid heat exchanger to capture the heat returned from the racks and reject it through the chilled water system. While CDUs can be positioned on the perimeter of the data center, most units are designed to fit within the row so they can be in proximity to the racks they support.

Balancing Capacity Between Air- and Liquid-Cooling Systems

When planning a liquid-cooling deployment in an air-cooled data center, it’s necessary to determine how much of the total heat load each system will handle, how much air-cooling capacity the liquid system will displace, and where liquid cooling may be introducing new demands on the air-cooling system.

In most applications, some air-cooling capacity is required to support the liquid-cooling system; only immersion cooling systems can operate with minimal support from air cooling. Even then, the immersion tanks' supply and return piping will radiate some heat, which needs to be removed to keep the room at set point. Direct-to-chip cold plates are typically installed only on the rack's main heat-generating components: central processing units (CPUs), graphics processing units (GPUs), and memory. As a result, they typically remove only 70% to 80% of the heat generated by the rack. In a 30-kW rack, this means the remaining 20% to 30%, or 6 to 9 kW, must be managed by the air-cooling system. Rear-door heat exchangers can remove 100% of the heat load from the rack, but because the rack's exhaust air is cooled by the door before it returns to the data center, opening the door for maintenance releases the rack's full heat load into the room. The air-cooling system must therefore have the capacity to handle the full heat load of the rack during those maintenance periods.
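The split between liquid and air can be turned into a rough planning estimate. This sketch uses the capture ranges from the text (70% to 80% for cold plates, up to 100% for rear doors) and treats an open rear door as releasing the rack's full load into the room. Immersion is omitted because its residual heat depends on tank and piping details. The function names and the example rack list are hypothetical.

```python
"""Sketch: heat left for the air-cooling system by liquid-cooled racks.

Capture fractions follow the ranges stated in the text; immersion is
deliberately not modeled. All names and inputs are illustrative.
"""
from typing import Dict, List, Tuple

# (min, max) fraction of rack heat captured by liquid.
CAPTURE_FRACTION: Dict[str, Tuple[float, float]] = {
    "cold_plate": (0.70, 0.80),
    "rear_door": (1.00, 1.00),  # best case, door closed
}


def air_load_kw(rack_kw: float, tech: str,
                rear_door_open: bool = False) -> Tuple[float, float]:
    """Return (best case, worst case) heat in kW left for air cooling."""
    if tech == "rear_door" and rear_door_open:
        # Door open for maintenance: the whole rack load enters the room.
        return (rack_kw, rack_kw)
    min_capture, max_capture = CAPTURE_FRACTION[tech]
    return (rack_kw * (1 - max_capture), rack_kw * (1 - min_capture))


def room_air_load_kw(racks: List[Tuple[float, str]], doors_open: int = 0) -> float:
    """Worst-case air load for (rack_kw, tech) racks with up to
    `doors_open` rear doors open at the same time."""
    # Rear-door racks first, largest first, so the biggest doors are "opened".
    ordered = sorted(racks, key=lambda r: (r[1] != "rear_door", -r[0]))
    total = 0.0
    for i, (rack_kw, tech) in enumerate(ordered):
        is_open = tech == "rear_door" and i < doors_open
        total += air_load_kw(rack_kw, tech, rear_door_open=is_open)[1]
    return total


if __name__ == "__main__":
    print(tuple(round(x, 1) for x in air_load_kw(30.0, "cold_plate")))
    racks = [(30.0, "cold_plate"), (20.0, "rear_door"), (30.0, "rear_door")]
    print(f"{room_air_load_kw(racks, doors_open=1):.1f} kW")
```

For this hypothetical room, the worst case with one 30 kW rear door open is about 39 kW of air-cooling demand, compared with 9 kW when all doors are closed, which illustrates why the maintenance scenario can dominate air-side sizing.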

Leak Detection and Mitigation

A leak-detection system should be considered an essential component of every liquid cooling system and should be integrated into the system design. The robustness of a particular system can be tailored to an organization’s comfort level with liquid cooling technology and risk tolerance.

Some organizations rely on indirect methods of leak detection in which pressures and flow are monitored across the fluid distribution system, and small changes in these precisely controlled variables are considered indicative of a potential leak. More commonly, direct leak-detection systems are employed. Using strategically located sensors, or a cable that can detect leaks across the distribution system, these hardware systems trigger alarms when fluids are detected, enabling early intervention.
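Indirect detection can be sketched as a monitor that compares supply and return flow on the closed secondary loop and watches for loop pressure falling below a learned baseline. In steady state the two flows should match, so a persistent shortfall on the return side suggests fluid is leaving the loop. The sensor layout, units, thresholds, and class names here are assumptions for illustration; real thresholds would need tuning to the specific system.

```python
"""Sketch of indirect leak detection on a closed secondary loop.

Assumes (hypothetically) that the loop reports supply and return flow in
L/min and loop pressure in kPa each sample. Thresholds are illustrative.
"""
from collections import deque
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Sample:
    supply_lpm: float
    return_lpm: float
    pressure_kpa: float


class IndirectLeakMonitor:
    def __init__(self, flow_tolerance_lpm: float = 0.5,
                 pressure_drop_kpa: float = 10.0, window: int = 30):
        self.flow_tolerance_lpm = flow_tolerance_lpm
        self.pressure_drop_kpa = pressure_drop_kpa
        self.baseline = deque(maxlen=window)  # recent pressures judged normal

    def check(self, s: Sample) -> List[str]:
        """Return the reasons this sample looks like a potential leak."""
        reasons = []
        # More fluid going out than coming back suggests loss between meters.
        if s.supply_lpm - s.return_lpm > self.flow_tolerance_lpm:
            reasons.append("flow imbalance")
        # Pressure well below the recent normal average.
        if self.baseline and mean(self.baseline) - s.pressure_kpa > self.pressure_drop_kpa:
            reasons.append("pressure drop")
        if not reasons:
            # Learn the baseline only from samples that look normal.
            self.baseline.append(s.pressure_kpa)
        return reasons


if __name__ == "__main__":
    monitor = IndirectLeakMonitor()
    for _ in range(10):
        monitor.check(Sample(40.0, 40.0, 200.0))  # normal operation
    print(monitor.check(Sample(40.0, 38.0, 185.0)))
```

Learning the baseline only from normal samples keeps a slow leak from dragging the reference pressure down with it, at the cost of needing a reset after any intentional change to loop pressure.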

The key to successful leak detection is minimizing false alarms without compromising the system's ability to detect actual leaks that require intervention. Your infrastructure partner can help configure and tune the leak-detection system for your application. Intervention is usually performed manually, but automated intervention systems are available. In an automated system, the control system triggers an appropriate response when a leak is detected, such as shutting off liquid flow or, if the leak is close to the rack, powering down IT equipment.
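One simple way to reduce false alarms from direct sensors is to debounce them, requiring several consecutive wet readings before acting. This sketch combines that with the responses named in the text: an alarm for manual intervention, shutting off liquid flow, or also powering down IT equipment when the leak is near the rack. The confirmation count, the `near_rack` flag, and all class and action names are hypothetical.

```python
"""Sketch: debounce a direct leak sensor and choose a response.

Assumes (hypothetically) one wet/dry reading per sensor per interval and
that each sensor is tagged with whether it sits close to a rack.
"""
from enum import Enum


class Action(Enum):
    NONE = "none"
    ALARM = "alarm"                  # notify operators for manual intervention
    CLOSE_VALVES = "close_valves"    # shut off liquid flow to the zone
    CLOSE_VALVES_AND_POWER_DOWN = "close_valves_and_power_down"


class LeakSensor:
    def __init__(self, near_rack: bool, confirm_samples: int = 3,
                 automated: bool = True):
        self.near_rack = near_rack
        self.confirm_samples = confirm_samples
        self.automated = automated
        self._wet_count = 0

    def update(self, wet: bool) -> Action:
        # Any dry reading resets the count, so isolated blips never act.
        self._wet_count = self._wet_count + 1 if wet else 0
        if self._wet_count < self.confirm_samples:
            return Action.NONE
        if not self.automated:
            return Action.ALARM
        if self.near_rack:
            return Action.CLOSE_VALVES_AND_POWER_DOWN
        return Action.CLOSE_VALVES


if __name__ == "__main__":
    sensor = LeakSensor(near_rack=True)
    for reading in [True, False, True, True, True]:
        print(sensor.update(reading).value)
```

A higher `confirm_samples` suppresses more transient readings but delays the response to a real leak, which is the tuning trade-off described above.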

The Hybrid Data Center

As more organizations seek to leverage the power of AI, more data centers will need to support high-density racks. Liquid cooling offers a viable approach to protecting the performance and availability of the applications those racks support, and hybrid air- and liquid-cooled data centers will become more common. Liquid cooling does, however, introduce a new set of challenges in data center design, and experience deploying liquid cooling in air-cooled facilities is still somewhat limited. In the early stages of the move to hybrid air- and liquid-cooled data centers, it is particularly important to work with vendors and contractors that have a proven track record in liquid cooling.