FIGURE 1. History of heat dissipation techniques (A. Kaveh, Electronics Cooling Magazine, January 2000).

Environmental control in critical facilities faces a few hurdles, but here’s one you don’t hear much: We just haven’t been doing it that long. Learning about real effects of different conditions on equipment is another key to saving energy and avoiding design overkill. As the author explains, a little context balanced with a commitment to precision criteria will go a long way in this environment.

Humidity control in data centers and other critical facilities, where the regulation of the environmental conditions is essential to the successful outcome of the process, is one of the more misunderstood aspects of the HVAC design for these buildings. Why is this? Addressing the following points will help clarify the issues.

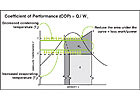

FIGURE 2. Increasing evaporating temperature (supply air temperature) reduces the amount of power needed by the compressors in central cooling equipment.

Point 1

The Great Unknown

Cooling of computers and other sensitive electronic equipment is still a niche industry; further commercialization of techniques and products is required.

Interestingly, one of the first commercial applications for A/C came in 1906, when it was used to maintain temperature and humidity simultaneously for a printing operation (not too far afield from modern-day data processing). From then until the middle of the century, when demand grew for comfort cooling, A/C was used in industries such as textiles, food processing and packaging, film processing, and other processes where fluctuations in temperature and humidity could have adverse effects.

Challenges in cooling electronic equipment started in the 1960s [1] with the advent of electronic data systems, used first in military applications and space exploration and later in banking and financial processing. Standards and design techniques for cooling electronics using commercially available environmental control equipment have been continually refined over the last 20 years. However, the most significant advances in reliability, power efficiency, and dealing with high-density computer installations have really only occurred in the last 10 years [2].

So, relative to when A/C systems were first used, providing environmental control in data centers is still in its early stages of development. With this said, it is understandable why there are still many gray areas that have not yet been standardized, especially given that computer technology itself continues to evolve at a very rapid pace.

As early researchers and inventors of A/C processes and equipment discovered, adding or removing moisture to or from the air in a data center (or any other facility type) is inextricably linked to the cooling process. Changing the moisture content or the temperature of the air requires energy. The amount of electricity (or other fuel source) that is required depends not only on the efficiency of the equipment, but also on the basic design criteria being used.

Although there is now a substantial amount of valuable design information on cooling systems for data centers, rules of thumb and outdated design criteria are still too often used (especially in the early conceptual design phases), leading to incorrect design assumptions and approaches which will often hinder the performance of the data center and increase power usage.

(Note: This is not meant as an indictment of HVAC designers who do not specialize in data center cooling techniques, but rather a challenge to those who do - they need to continue to work toward the development of best practices in design, commissioning, and operations of data center cooling systems, which will benefit everyone involved.)

Point 2

The Real Effect On Equipment

More data is required on the failure mechanisms of electronics due to temperature and humidity levels (including both steady state and transient conditions).

Most computer servers, storage devices, networking gear, etc., will come with an operating manual that stipulates environmental conditions with a range something like 20% to 80% non-condensing rh and a recommended operation range of 40% to 55% rh. What is the difference between maximum and recommended? It has to do with prolonging the life of the equipment and avoiding failures due to electrostatic discharge (ESD) and corrosion failure that can come from out-of-range humidity levels in the facility.

However, there is little, if any, industry-accepted data on what the projected service life reduction would be based on varying humidity levels. In conjunction with this, the use of outside air for cooling will reduce the power consumption of the cooling system, but with outside air comes dust, dirt, and wide swings in moisture content during the course of a year. These particles can accumulate on and in between electronic components, resulting in electrical short circuits.

Also, accumulation of particulate matter can alter airflow distribution and thus adversely affect thermal performance [3]. Actual field-collected data on failures related to temperature and humidity, as well as dust and other particulates, would really help shed light on how to solve these design questions. This data is necessary when looking at the first cost of the computer equipment as compared to the ongoing expense of operating a very tightly controlled facility.

Certainly placing computers in an environment that can cause unexpected failures is not acceptable. However, if the computers are envisioned to have a three-year in-service life and relaxing stringent environmental requirements will not cause a reduction in this service life, a data center owner may opt to save ongoing operating expense stemming from strict control of temperature and humidity levels.

This seems easier said than done, however. There is published data on failure mechanisms of electronic equipment, such as MIL-HDBK-217, “Reliability Prediction of Electronic Equipment,” but much more research is required to determine the influence of the many interdependent factors, such as thermo-mechanical, EMC, vibration, humidity, and temperature [4]. Also, the rates of change of each of these factors, not just the steady state conditions, will have an impact on the failure mode.

Finally, a majority of failures occur at “interface points” and not necessarily of a component itself. Translated, this means contact points such as soldering often cause failures. So it becomes quite the difficult task for a computer manufacturer to accurately predict distinct failure mechanisms, since the computer itself is made up of many sub-systems developed and tested by other manufacturers.

TABLE 1. Conditions of air at the inlet to computer equipment (Thermal Guidelines for Data Processing Environments, ASHRAE, ©2004).

Point 3

A Savings Example

Design of HVAC systems for data centers requires a close look at the psychrometric processes, fine-tuned by using simulation tools to evaluate inherent transient conditions.

There is much discussion taking place in the data center industry on the optimization of the temperature and moisture content of the air entering into computers, storage devices, networking gear, and other equipment. The current ASHRAE guidelines provide data on the range of acceptable inlet conditions. These conditions are summarized in Table 1.

An important point of this information is that the recommended conditions of the air are at the inlet to the computer. There are a number of legacy data centers (and many still in design) that produce air much colder than what is required by the computers. Also, the air will most often be saturated (cooled to the same value as the dewpoint of the air) and will require the addition of moisture in the form of humidification in order to get it back to the required conditions. This cycle is very energy intensive and does nothing to improve the environmental conditions that the computers operate in.

Also, the use of rh as a metric in data center design is somewhat misleading. Relative humidity (as the name implies) changes as the drybulb temperature of the air changes. If the upper and lower limits of temperature and rh are plotted on a psychrometric chart, the dewpoint temperatures range from approximately 43°F to 59°F, and the humidity ratios range from approximately 40 grains/lb to 78 grains/lb.
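The chart reading described above can be approximated numerically. The following is a minimal sketch, not the method used to produce the article's figures: it assumes the Magnus approximation for saturation vapor pressure, sea-level pressure, and recommended limits of 68°F at 40% rh and 77°F at 55% rh (the temperature limits are an assumption here, since Table 1 is not reproduced in the text). All function names are illustrative.

```python
import math

SEA_LEVEL_HPA = 1013.25  # assumption: facility at sea level


def f_to_c(temp_f):
    return (temp_f - 32.0) / 1.8


def c_to_f(temp_c):
    return temp_c * 1.8 + 32.0


def saturation_pressure_hpa(temp_c):
    """Saturation vapor pressure over water (hPa), Magnus approximation."""
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def vapor_pressure_hpa(drybulb_f, rh_percent):
    """Partial pressure of water vapor at the given drybulb and rh."""
    return rh_percent / 100.0 * saturation_pressure_hpa(f_to_c(drybulb_f))


def dewpoint_f(drybulb_f, rh_percent):
    """Dewpoint (°F) found by inverting the Magnus approximation."""
    log_ratio = math.log(vapor_pressure_hpa(drybulb_f, rh_percent) / 6.112)
    return c_to_f(243.12 * log_ratio / (17.62 - log_ratio))


def humidity_ratio_grains(drybulb_f, rh_percent, pressure_hpa=SEA_LEVEL_HPA):
    """Humidity ratio in grains of water per lb of dry air (7,000 grains = 1 lb)."""
    e = vapor_pressure_hpa(drybulb_f, rh_percent)
    return 0.622 * e / (pressure_hpa - e) * 7000.0


# Assumed lower and upper corners of the recommended envelope.
for drybulb, rh in [(68.0, 40.0), (77.0, 55.0)]:
    print(f"{drybulb:.0f}°F, {rh:.0f}% rh: "
          f"dewpoint {dewpoint_f(drybulb, rh):.1f}°F, "
          f"{humidity_ratio_grains(drybulb, rh):.0f} grains/lb")
```

Under these assumptions the results should land close to the dewpoint and humidity ratio ranges quoted above; small differences are expected from chart reading, altitude, and the approximation used.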

It is important to establish precise criteria on not only the temperature but also the dewpoint temperature or humidity ratio of the air at the inlet of the computer. This would eliminate any confusion about which rh value to use at which temperature. This may be complicated by the fact that most cooling and humidification equipment is controlled by rh, and most operators have a better feel for rh than for grains/lb as an operating parameter. Changes in how equipment is specified and controlled will be needed to fully use dewpoint or humidity ratio as a means for measurement and control.
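To illustrate why a dewpoint criterion is less ambiguous than an rh criterion, the sketch below holds the moisture content fixed and shows how the rh reading shifts with drybulb temperature. It assumes the Magnus approximation for saturation vapor pressure; the 50°F dewpoint and the three inlet temperatures are hypothetical values chosen only for illustration.

```python
import math


def saturation_pressure_hpa(temp_f):
    """Saturation vapor pressure over water (hPa), Magnus approximation."""
    temp_c = (temp_f - 32.0) / 1.8
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def rh_percent(drybulb_f, dewpoint_f):
    """Relative humidity of air with the given dewpoint at the given drybulb."""
    return 100.0 * saturation_pressure_hpa(dewpoint_f) / saturation_pressure_hpa(drybulb_f)


FIXED_DEWPOINT_F = 50.0  # hypothetical moisture level, held constant

for drybulb in (68.0, 72.0, 77.0):  # hypothetical inlet temperatures
    print(f"{drybulb:.0f}°F drybulb -> {rh_percent(drybulb, FIXED_DEWPOINT_F):.0f}% rh")
```

The same air, with no moisture added or removed, reports a lower rh at each higher drybulb temperature, which is why a single rh setpoint can mean different moisture contents in different parts of the room.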

So what impact does this have on data center operations? The main impact comes in the form of increased energy use, equipment cycling, and quite often, simultaneous cooling/dehumidification and reheating/humidification. Discharging air at 55° from the coils in an AHU is common practice in the HVAC industry, especially in legacy data centers.

Why? Because typical room conditions for comfort cooling during the summer months are generally around 75° and 50% rh. The dewpoint at these conditions is 55°, so the air will be delivered to the conditioned space at 55°. The air warms up (typically 20°) due to the sensible heat load in the conditioned space and is returned to the AHU. It will then be mixed with warmer, more humid outside air and sent back to flow over the cooling coil. The air is then cooled and dried to a comfortable level for human occupants and supplied back to the conditioned space. While this works pretty well for office buildings, it does not transfer as well to data center design.

Using this same process description for an efficient data center cooling application, it would be modified as follows: Since the air being supplied to the computer equipment needs to be (as an example) 78° and 40% rh, the air being delivered to the conditioned space would be able to range from 68° to 73°, accounting for safety margins due to unexpected mixing of air resulting from improper air management techniques. (The air temperature could be higher with strict airflow management, using enclosed cold aisles or cabinets that have provisions for internal thermal management).

The air warms up (typically 20° to 25°) due to the sensible heat load in the conditioned space and is returned to the AHU. (Although the discharge temperature of the computer is not of concern to the computer’s performance, high discharge temperatures need to be carefully analyzed to prevent thermal runaway during a loss of cooling, as well as the effects of the high temperatures on the data center operators when working behind the equipment.) It will then be mixed with warmer, more humid outside air and sent back to flow over the cooling coil (or a separate AHU supplies the outside air). The air is then cooled down and returned to the conditioned space.

What is the difference in these two examples? All else being equal, the total air conditioning load in the two examples will be the same. However, the power used by the central cooling equipment in the first case will be close to 50% greater than that of the second. This is due to the fact that much more energy is needed to produce 55° air vs. 75° air (Figure 2). Also, if higher supply air temperatures are used, the hours for using outdoor air for either air economizer or water economizer can be extended significantly. This includes the use of more humid air that would normally be below the dewpoint of the coil using 55° discharge air.
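The direction and rough size of this effect can be illustrated with an idealized Carnot refrigeration cycle. This is only a sketch of the thermodynamic trend, not the calculation behind the article's 50% figure or Figure 2: the 105°F condensing temperature and the 10°F difference between supply air and evaporating temperature are hypothetical, and real equipment falls well short of Carnot performance.

```python
RANKINE_OFFSET = 459.67

CONDENSING_F = 105.0  # hypothetical condensing temperature
APPROACH_F = 10.0     # hypothetical gap between supply air and evaporating temperature


def carnot_cop(evaporating_f, condensing_f):
    """Ideal (Carnot) cooling COP from absolute temperatures."""
    evap_r = evaporating_f + RANKINE_OFFSET
    cond_r = condensing_f + RANKINE_OFFSET
    return evap_r / (cond_r - evap_r)


def relative_power(cold_supply_f, warm_supply_f):
    """Ideal compressor power at the colder supply air temperature,
    relative to the warmer one, for the same cooling load."""
    cop_cold = carnot_cop(cold_supply_f - APPROACH_F, CONDENSING_F)
    cop_warm = carnot_cop(warm_supply_f - APPROACH_F, CONDENSING_F)
    return cop_warm / cop_cold


ratio = relative_power(55.0, 75.0)
print(f"Ideal power at 55°F supply is {100.0 * (ratio - 1.0):.0f}% higher than at 75°F supply")
```

Because power for a fixed load is inversely proportional to COP, raising the evaporating temperature narrows the lift the compressor has to work against, and the ideal power ratio under these assumptions is of the same order as the savings described above.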

Similarly, if the rh or humidity ratio requirements were lowered in cool and dry climates that are ideal for using outside air for cooling, more hours of the year could be used to reduce the load on the central cooling system without having to add moisture to the airstream.

Conclusion

Understanding the psychrometric processes that underpin HVAC system performance is critically important in developing successful system design and control strategies for data centers. Continual refinement of standards and best practices specific to data centers is needed to make advancements in optimizing design and operation. Education of data center designers and operators, as well as close collaboration of the computer manufacturers will be required, however, to fully realize the benefits of these advancements.

CITED WORKS

1. Kaveh, A. “The History of Power Dissipation.” Electronics Cooling Magazine. January, 2000.

2. Schmidt, R. R. and B. D. Notohardjono. “High End Server Low Temperature Cooling.” IBM Research and Development Magazine. November, 2002.

3. Woolfold, Allen. “Specifying Filters for Forced Convection Cooling.” Electronics Cooling Magazine. October, 1995.

4. Parry, John, Jukka Rantala, and Clemens Lasance. “Temperature and Reliability in Electronics Systems - The Missing Link.” Electronics Cooling Magazine. November, 2001.